Key Takeaway:

Research demonstrates that AI learning agents focused on knowledge access and conversational interfaces deliver measurable improvements in learning outcomes, while AI content generation tools show no correlation with improved retention or performance. This analysis examines five leading platforms and identifies the characteristics that distinguish effective agentic learning from superficial efficiency gains.

Executive Summary

The corporate learning landscape faces unprecedented pressure. The World Economic Forum predicts that 1.4 billion people will need reskilling within three years.

Meanwhile, cognitive research reveals a hidden cost: frequent context switching erodes up to 40% of worker productivity. Traditional learning management systems compound these challenges by burying content and forcing learners to navigate multiple disconnected tools.

AI learning agents represent a fundamental shift in how organizations deliver training. These systems use large language models and retrieval-augmented generation to interpret natural language questions, retrieve relevant information from approved content, and deliver personalized answers within seconds.

Early implementations show dramatic results. Organizations report 300-500% ROI in the first year, 50-80% reduction in time to find information, and 70-80% knowledge retention after 30 days.

This research examines the current state of AI learning agents. We analyze the underlying technologies, evaluate five leading platforms, and present evidence on what differentiates effective implementations from marketing hype.

Our findings suggest that the strategic approach to AI integration matters more than the technology itself.

Understanding AI Learning Agents

AI learning agents for learning and development (L&D) are autonomous software programs designed specifically to deliver L&D outcomes. Unlike traditional generative AI tools that simply assist with content creation, these agents function as digital deputies that take action autonomously to improve learning effectiveness and organizational productivity.

In the learning and development context, these agents perform several critical functions. They integrate corporate content repositories—including courses, policies, videos, and documents—and observe user behavior patterns to deliver personalized guidance.

The technology combines large language models (LLMs) with retrieval-augmented generation to understand natural language questions, fetch information from approved sources, and deliver role-specific answers within seconds.
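The retrieve-then-answer loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any vendor's implementation. The `Doc` type, the word-overlap scorer (a toy stand-in for the embedding search a production system would typically use), and the `build_prompt` helper are all hypothetical, and the final LLM call is deliberately left out.

```python
import re
from dataclasses import dataclass

@dataclass
class Doc:
    doc_id: str  # hypothetical identifier for an approved source
    text: str

def tokenize(text: str) -> set[str]:
    # Lowercase word set: a toy stand-in for vector embeddings
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Rank approved docs by word overlap with the question; keep the top k with any overlap."""
    q = tokenize(question)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [d for _, d in scored[:k]]

def build_prompt(question: str, passages: list[Doc]) -> str:
    """Assemble a grounded prompt that asks the model to answer only from cited sources."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in passages)
    return (
        "Answer using only the sources below and cite their IDs in brackets.\n"
        f"{context}\n\nQuestion: {question}"
    )

docs = [
    Doc("pto-policy", "Employees accrue PTO monthly and request it in the HR portal."),
    Doc("expense-guide", "Submit expense reports within 30 days with receipts attached."),
    Doc("security-101", "Report phishing emails to the security team immediately."),
]
question = "How do I request PTO?"
top = retrieve(question, docs)
print([d.doc_id for d in top])  # prints ['pto-policy']
print(build_prompt(question, top))
```

Only the retrieved passages reach the prompt. That restriction is what lets the answer stay within approved sources.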

What distinguishes AI learning agents from traditional learning technology is their autonomous nature. They make real-time decisions about what information to surface, how to personalize responses based on individual roles and learning history, and when to provide follow-up recommendations. Modern implementations use conversational interfaces that ask clarifying questions and record interactions for analytics purposes, functioning as 24/7 mentors that gather insights to inform L&D strategy.

The key difference: while traditional AI tools assist human effort, AI learning agents act independently on behalf of learners, retrieving knowledge, personalizing experiences, and automating learning workflows without constant human oversight.

Traditional Chatbots vs AI Learning Agents

Traditional chatbots follow predetermined scripts. They often deliver generic or inaccurate responses because they lack contextual understanding.

Modern AI learning agents represent a significant advance. They analyze organizational content—courses, policies, videos, documents—to answer role-specific questions. They personalize interactions based on user role, learning history, and current skill needs.

Transparency is another distinguishing feature. Each response links back to approved documents, ensuring traceability and reducing AI hallucinations.
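One simple way to enforce this traceability is to reject any answer whose citations do not all point to approved documents. The sketch below assumes a hypothetical convention in which the model cites sources as bracketed IDs such as `[pto-policy]`. The regex and the `check_citations` name are illustrative.

```python
import re

# Hypothetical citation format: a bracketed source ID such as [pto-policy]
CITATION = re.compile(r"\[([A-Za-z0-9_-]+)\]")

def check_citations(answer: str, allowed_ids: set[str]) -> tuple[bool, list[str]]:
    """Return (ok, unknown_ids). ok is True only if the answer cites at least
    one source and every cited ID belongs to the approved set."""
    cited = CITATION.findall(answer)
    unknown = [c for c in cited if c not in allowed_ids]
    return (bool(cited) and not unknown, unknown)

allowed = {"pto-policy", "expense-guide"}
print(check_citations("Request PTO in the HR portal [pto-policy].", allowed))  # (True, [])
print(check_citations("Ask your manager [manager-faq].", allowed))  # (False, ['manager-faq'])
print(check_citations("Just ask around.", allowed))  # (False, [])
```

An answer that fails the check could be regenerated or replaced with a fallback, such as routing the learner to a human expert. That policy choice is left open here.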

The conversation happens within existing tools like Slack, Microsoft Teams, or SMS, eliminating the need to switch contexts.

Advanced agents extend beyond simple Q&A. They automate administrative workflows, generate reports, and handle routine tasks. This positions them as 24/7 mentors that deliver just-in-time knowledge while gathering analytics to inform L&D strategy.

The Role of Microlearning in Conversational AI

Research on microlearning reveals why conversational delivery matters. Bite-sized lessons delivered through messaging apps dramatically outperform traditional eLearning.

Arist's 2025 benchmark study provides compelling evidence. Corporate training modules delivered via Slack, Teams, or SMS achieve 80-90% completion rates compared with only 15-20% for traditional eLearning.

The retention data is even more striking. Knowledge retention after 30 days reaches 70-80%—more than double the 20-30% achieved by conventional courses.

Time-to-competency improves substantially. Microlearning reduces learning curves from 8-12 weeks to 2-3 weeks. Development time and costs drop by 70-85%.

This approach aligns with cognitive science. Working memory holds only a limited number of concepts at once. Learning is also most effective when information is spaced over time rather than compressed into marathon sessions, a finding known as the spacing effect.
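The spacing principle can be made concrete with an expanding-interval schedule. This minimal sketch assumes a simple rule: each gap doubles, starting at one day. It is not the SM-2 algorithm or any platform's actual scheduler, and `first_gap_days` and `factor` are illustrative parameters.

```python
from datetime import date, timedelta

def review_dates(start: date, reviews: int, first_gap_days: int = 1, factor: int = 2) -> list[date]:
    """Schedule follow-up micro-lessons at expanding intervals after the first lesson."""
    schedule = []
    gap = first_gap_days
    current = start
    for _ in range(reviews):
        current = current + timedelta(days=gap)
        schedule.append(current)
        gap *= factor  # widen the spacing after each review
    return schedule

for d in review_dates(date(2025, 1, 6), reviews=4):
    print(d.isoformat())
# 2025-01-07, 2025-01-09, 2025-01-13, 2025-01-21 (1, 3, 7 and 15 days after the lesson)
```

Delivered as short messages in Slack, Teams, or SMS, each review can stay small enough to respect working-memory limits.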

By combining AI agents with microlearning principles, organizations provide on-demand coaching that respects cognitive limits, encourages active engagement, and reinforces knowledge when it matters most.

Two Approaches to AI in Learning

The market has produced two distinct strategies for applying AI to corporate learning. The differences between them have significant implications for learning outcomes.

Approach One: Content Generation

The first strategy focuses on accelerating course creation. These tools use generative AI to produce SCORM modules, videos, and assessments faster than human teams.

The promise is compelling: reduce production time from weeks to hours. Lower content creation costs dramatically. Fill learning libraries quickly with fresh material.

However, research reveals four critical limitations.

1. Hidden operational costs emerge quickly.

Generative tools automate first-draft creation, but they don't eliminate work—they shift it. L&D teams still invest significant effort validating accuracy, aligning with organizational priorities, ensuring accessibility and compliance, and maintaining content currency. This oversight erodes much of the promised efficiency gain.

2. ROI remains elusive.

McKinsey's State of AI 2024 report highlights a critical gap. Many HR and content teams deploy generative AI tools, yet few can demonstrate measurable ROI tied to actual outcomes. Time saved on production doesn't translate into improved learner performance, retention, or business impact. For L&D leaders under pressure to prove value, "faster content" without measurable outcomes represents a hollow victory.

3. Pedagogical depth suffers.

Research on auto-generated learning materials shows that learners consume them passively. Generative tools excel at producing words and visuals. They struggle to embed proven learning science methods like retrieval practice, scaffolding, or spaced reinforcement. Without these elements, learners may complete modules but fail to retain or apply knowledge—undermining the entire purpose of training.

4. Scale doesn't equal success.

Training Industry's 2024 analysis emphasizes this point. While generative tools rapidly scale production, scale alone doesn't determine effectiveness. What matters: learner engagement, alignment with business needs, and measurable transfer of knowledge into performance. Generative content may fill LMS libraries faster, but without deeper design and engagement strategies, it risks becoming "content for content's sake."

Approach Two: Agentic Learning

The second strategy takes a fundamentally different direction. It focuses on building a knowledge layer and providing natural-language access to existing information.

Rather than producing more content, agentic learning makes current knowledge more accessible, contextual, and actionable. This directly addresses the problem most organizations face: not too little content, but too much content that's poorly organized and hard to find.

This approach delivers efficiency in two ways.

First, once an agentic knowledge layer exists, it requires little additional manual effort to sustain engagement beyond keeping the underlying content current. Learners interact dynamically with the agent, not static content libraries. The system scales without proportional increases in overhead.

Second, it improves actual learning outcomes. Conversational agents scaffold understanding, provide retrieval practice, and adapt to learner needs. Research demonstrates that pedagogical agents measurably improve self-directed learning outcomes.

McKinsey's analysis supports this finding: embedding AI directly in workflows, rather than offering it as a separate tool, reduces friction and improves uptake. Agentic learning systems apply this principle most fully.

Comparative Evidence

The table below summarizes key differences:

This evidence suggests organizations should evaluate AI learning tools based on outcome metrics, not production speed.

Conversational Learning as a Framework

Conversational Learning™ represents a specific implementation of agentic learning principles. The framework emphasizes AI-powered, just-in-time learning through natural conversation inside existing work tools.

The approach contrasts sharply with traditional eLearning in five ways.

On-demand delivery.

Employees ask questions in Slack, Teams, or SMS. An AI agent interprets intent and retrieves relevant knowledge instantly. No scheduled courses. No waiting for the next training session.

Personalized responses.

Answers incorporate the user's role, prior learning, and current context, replacing generic content with information tailored to individual needs.

Measurable learning events.

Each question becomes a trackable data point. Analytics reveal what people ask, identify knowledge gaps, and measure performance improvements. This turns informal learning into quantifiable outcomes.

Elimination of context switching.

Workers stay in their workflow. They don't leave Slack or Teams to dig through courses—a practice that wastes up to 40% of productive time. Learning happens where work happens.

Native tool integration.

The learning experience embeds directly into Slack, Teams, SMS, and platform interfaces. This aligns with Josh Bersin's principle of "learning in the flow of work".
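The "measurable learning events" idea above lends itself to a simple aggregation. The sketch below assumes a hypothetical event log in which each entry records a topic tag and whether the agent found an answer in approved content. It flags topics that draw many questions but have a low answer rate as likely knowledge gaps. The field names and both thresholds are illustrative.

```python
from collections import defaultdict

def knowledge_gaps(events: list[dict], min_questions: int = 3,
                   max_answer_rate: float = 0.5) -> list[tuple[str, int, float]]:
    """Return (topic, question_count, answer_rate) for topics that are asked about
    often but frequently go unanswered, most-asked first."""
    asked = defaultdict(int)
    answered = defaultdict(int)
    for e in events:
        asked[e["topic"]] += 1
        if e["answered"]:
            answered[e["topic"]] += 1
    gaps = []
    for topic, n in asked.items():
        rate = answered[topic] / n
        if n >= min_questions and rate <= max_answer_rate:
            gaps.append((topic, n, round(rate, 2)))
    return sorted(gaps, key=lambda g: g[1], reverse=True)

# Hypothetical question log
events = (
    [{"topic": "expenses", "answered": True}] * 5
    + [{"topic": "new-crm", "answered": False}] * 3
    + [{"topic": "new-crm", "answered": True}]
    + [{"topic": "parking", "answered": False}] * 2
)
print(knowledge_gaps(events))  # [('new-crm', 4, 0.25)]
```

The `min_questions` floor keeps one-off questions (here, "parking") from being reported as gaps before there is enough signal.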

The Cost of Context Switching

Research on context switching reveals significant hidden costs. Harvard Business Review studies found that even brief mental blocks from switching tasks can consume up to 40% of productive time.

The cognitive toll extends beyond time. Continuous partial attention increases error rates. It elevates stress and frustration. Workers report feeling overwhelmed when forced to manage multiple disconnected systems.

By meeting learners in their existing environment, AI agents reduce cognitive load. This improves knowledge retention and application. It also boosts confidence—workers know help is available instantly without disrupting their workflow.

Conversational Learning™ delivers specific benefits:

- 50-80% reduction in search time for finding information

- Higher relevance as answers address specific problems, leading to better knowledge application

- 60% improvement in retention after 90 days because learning occurs when knowledge is needed

- Better employee experience from confidence that help is immediately available

Slack and Teams as Learning Platforms

The rise of Slack and Microsoft Teams as digital work hubs makes them natural platforms for conversational learning.

Slack statistics for 2025 show impressive adoption: 42 million daily active users across more than 215,000 organizations, with retention above 98%.

Organizations using Slack report measurable productivity gains: 32% decrease in internal email, 23% increase in cross-functional collaboration, and 19% fewer meetings. Automated workflows save 5.6 hours per employee per week. Onboarding time drops by 27%, issues resolve 21% faster, and team satisfaction improves by 15%.

Microsoft Teams shows similar centrality. Roughly 80% of employees rely on Teams to collaborate, making it the most-used application in Microsoft 365. AI features like Copilot let users summarize conversations, rewrite responses, and access contextual prompts without leaving the environment.

The case for embedding learning in these tools strengthens when considering the alternative. Asana's 2025 Anatomy of Work Index found that workers switch between nine apps per day. More than half (56%) feel compelled to respond to notifications immediately, which leads to overwhelm; 42% spend excessive time on email, and 52% multitask during virtual meetings.

Integrating learning into Slack and Teams consolidates tools, reduces interruptions, and combats the negative effects of context switching.

Business Impact: The Evidence

Research on conversational learning implementations reveals substantial business benefits. These metrics come from organizations that have deployed AI learning agents at scale.

Financial returns reach 300-500% ROI in the first year, driven by efficiency gains and reduced training overhead.
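For readers checking such figures, first-year ROI is conventionally computed as net benefit divided by cost. Here B is total first-year benefit and C is total program cost. The numbers below are hypothetical and only illustrate the arithmetic: a program that costs $100,000 and returns $400,000 in measurable first-year benefits has an ROI of 300%.

```latex
\mathrm{ROI} = \frac{B - C}{C} \times 100\%,
\qquad
\frac{400{,}000 - 100{,}000}{100{,}000} \times 100\% = 300\%
```

By the same arithmetic, the 300-500% range cited here implies first-year benefits of four to six times total program cost.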

Operational efficiency improves across multiple dimensions:

- 50-80% reduction in time to find information

- 30-60% decrease in help-desk tickets as employees self-serve answers

- 25-45% improvement in first-call resolution through real-time guidance

- 40-70% faster onboarding of new employees

Engagement metrics show dramatic improvements:

- 3-5× higher learner engagement compared with traditional training

- 90% user-satisfaction scores

- 80-90% completion rates versus 15-20% for traditional eLearning

Learning outcomes demonstrate the approach's effectiveness:

- 70-80% knowledge retention after 30 days, more than double the 20-30% retention of conventional courses

- 60-75% reduction in time to competency, shrinking learning curves from 8-12 weeks to 2-3 weeks

Cost reductions accumulate quickly:

- 75-90% reduction in training costs through shorter development cycles and efficient delivery

- $1,200-$3,000 per employee in annual productivity gains

These numbers demonstrate that conversational AI delivers measurable improvements in productivity, cost reduction, and employee satisfaction. For organizations that must prove the return on their L&D investment, such tangible benefits represent a fundamental shift in how learning contributes to business outcomes.

Why Organizations Implement AI Learning Agents

Analysis of implementations reveals five primary drivers.

1. Reducing context switching and boosting productivity.

Embedding learning into collaboration tools dramatically cuts app switching. Slack users report a 32% reduction in internal email and 19% fewer meetings, and automated workflows save 5.6 hours per employee per week. Microsoft Teams is already the main collaboration tool for roughly 80% of employees. Eliminating context switching boosts productivity by more than 40%.

2. Delivering personalized, just-in-time coaching.

Microlearning through messaging apps achieves 80-90% completion rates and 70-80% knowledge retention after 30 days. Agents assess roles and skills, then recommend targeted training. This accelerates time to competency to 2-3 weeks versus traditional timelines of 8-12 weeks.

3. Automating administrative and repetitive tasks.

AI agents handle scheduling, document generation, and routine communications, freeing managers and instructors to focus on higher-value work. Some platforms plan to automate reporting and enrollment, with estimated time savings of 80% for admin work. Others handle merge-tag updates and video time stamping.

4. Increasing engagement and collaboration.

Slack's conversational environment boosts cross-functional collaboration by 23% and improves team satisfaction by 15%. AI agents ask follow-up questions and provide interactive guidance, fostering active learning rather than passive consumption.

5. Delivering measurable ROI and cost savings.

Conversational learning yields 300-500% ROI in the first year. Microlearning reduces development costs by 75-90% and training time by 70-85%. Together, these improvements translate into annual productivity gains of $1,200-$3,000 per employee.

Analysis of Leading Platforms

Five platforms have emerged as leaders in the AI learning agent space. Each takes a different approach to implementing AI in corporate learning environments.

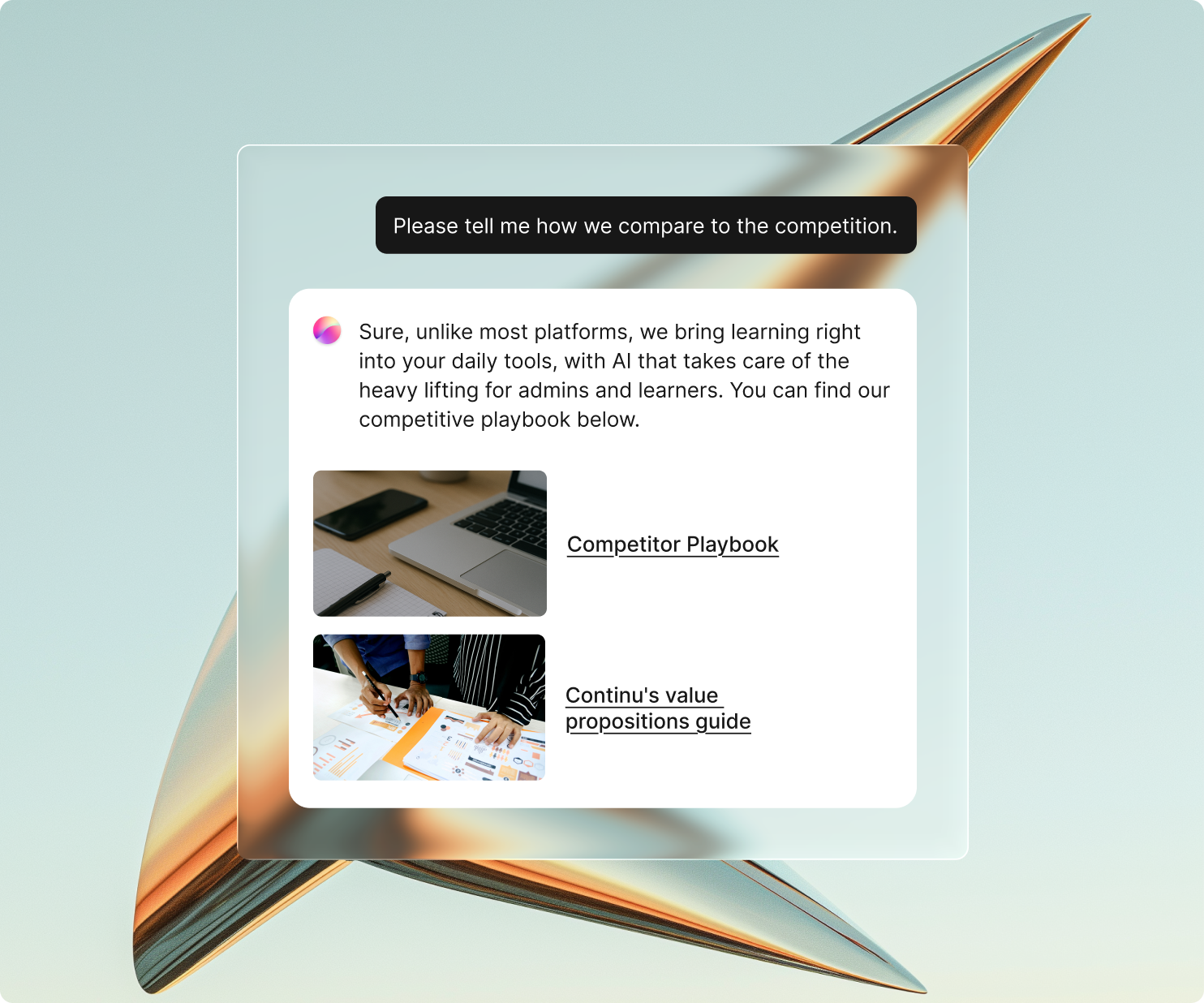

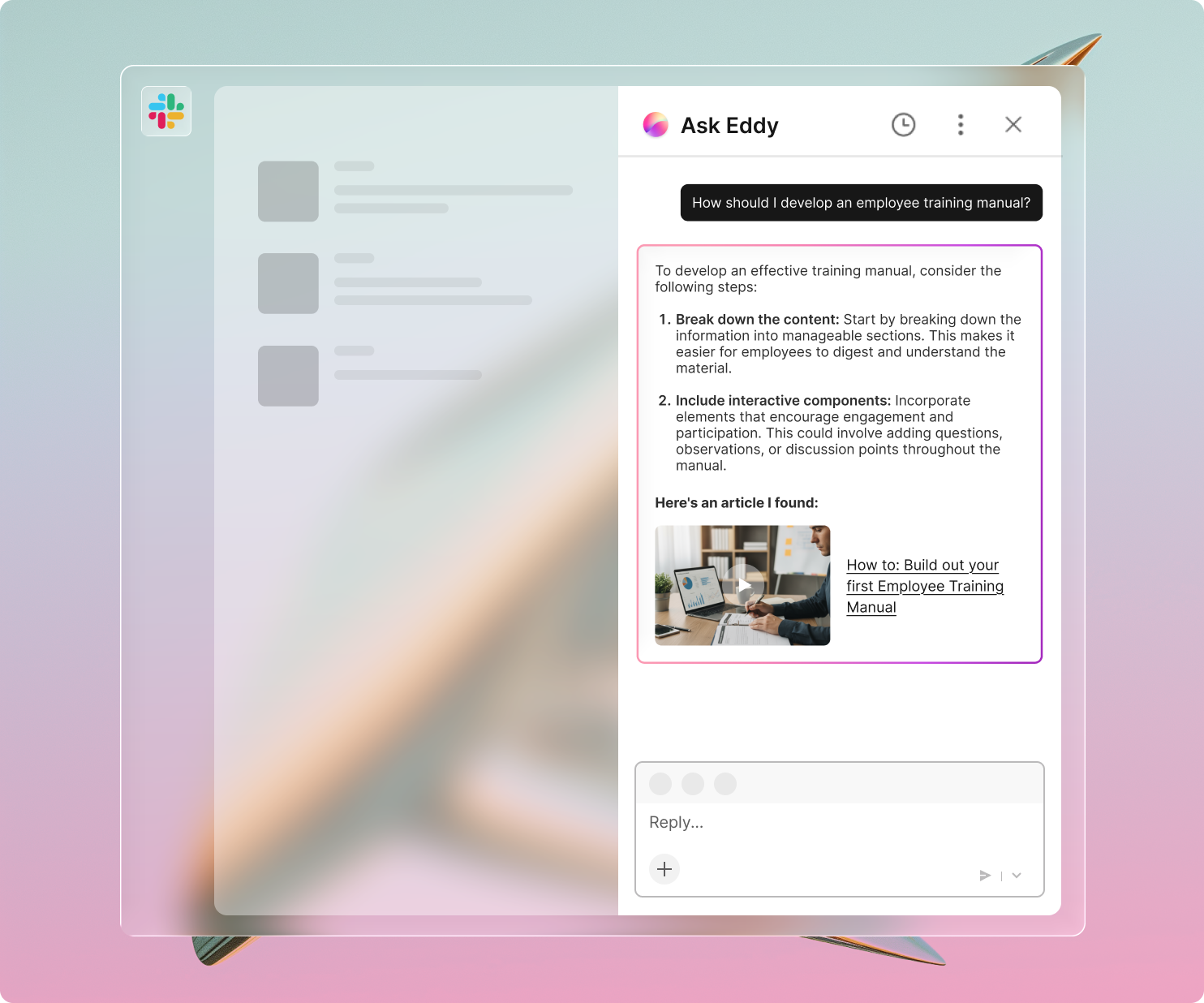

1. Eddy by Continu

Eddy distinguishes itself through its focus on flow-of-work learning. The AI Learning Agent was designed specifically to operate outside the traditional LMS environment.

Key characteristics:

The system works across multiple channels: Slack, Microsoft Teams, SMS, and in-app. Employees don't need to log into a separate platform. This eliminates the context switching that reduces productivity.

Smart Segmentation™ technology delivers role-appropriate answers. Learners see only content they're permitted to access, ensuring both security and relevance. The system maintains SOC 2 Type II and GDPR compliance and uses a closed-loop LLM that keeps content private.

The platform focuses exclusively on knowledge access rather than content generation. It uses existing organizational content, eliminating the need to build separate knowledge bases. All responses cite sources, reducing the risk of hallucinations.
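The permission-aware behavior described under Smart Segmentation™ can be illustrated with a filter applied before retrieval. This is a generic access-control sketch under assumptions, not Continu's implementation. The `Content` type, the group labels, and the rule that a user can see an item if they share at least one group with it are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Content:
    content_id: str
    allowed_groups: set[str] = field(default_factory=set)  # empty set = visible to everyone

def visible_to(user_groups: set[str], library: list[Content]) -> list[str]:
    """Filter the library before retrieval so the agent can only search
    content this user is permitted to see."""
    return [
        c.content_id
        for c in library
        if not c.allowed_groups or c.allowed_groups & user_groups
    ]

library = [
    Content("company-handbook"),
    Content("sales-playbook", {"sales"}),
    Content("payroll-procedures", {"finance", "hr"}),
]
print(visible_to({"sales"}, library))  # ['company-handbook', 'sales-playbook']
print(visible_to({"hr"}, library))     # ['company-handbook', 'payroll-procedures']
```

Filtering before retrieval, rather than redacting afterwards, means restricted text never reaches the model's prompt at all.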

Reported outcomes:

Organizations using Eddy report retention and performance improvements up to tenfold. Productivity increases by more than 40% through elimination of context switching. Financial returns reach 300-500% ROI in the first year.

Strategic approach:

Continu made an explicit decision not to include AI content generation features. This reflects their analysis that content creation tools don't improve learning outcomes. Instead, they focus resources on making existing knowledge instantly accessible and actionable.

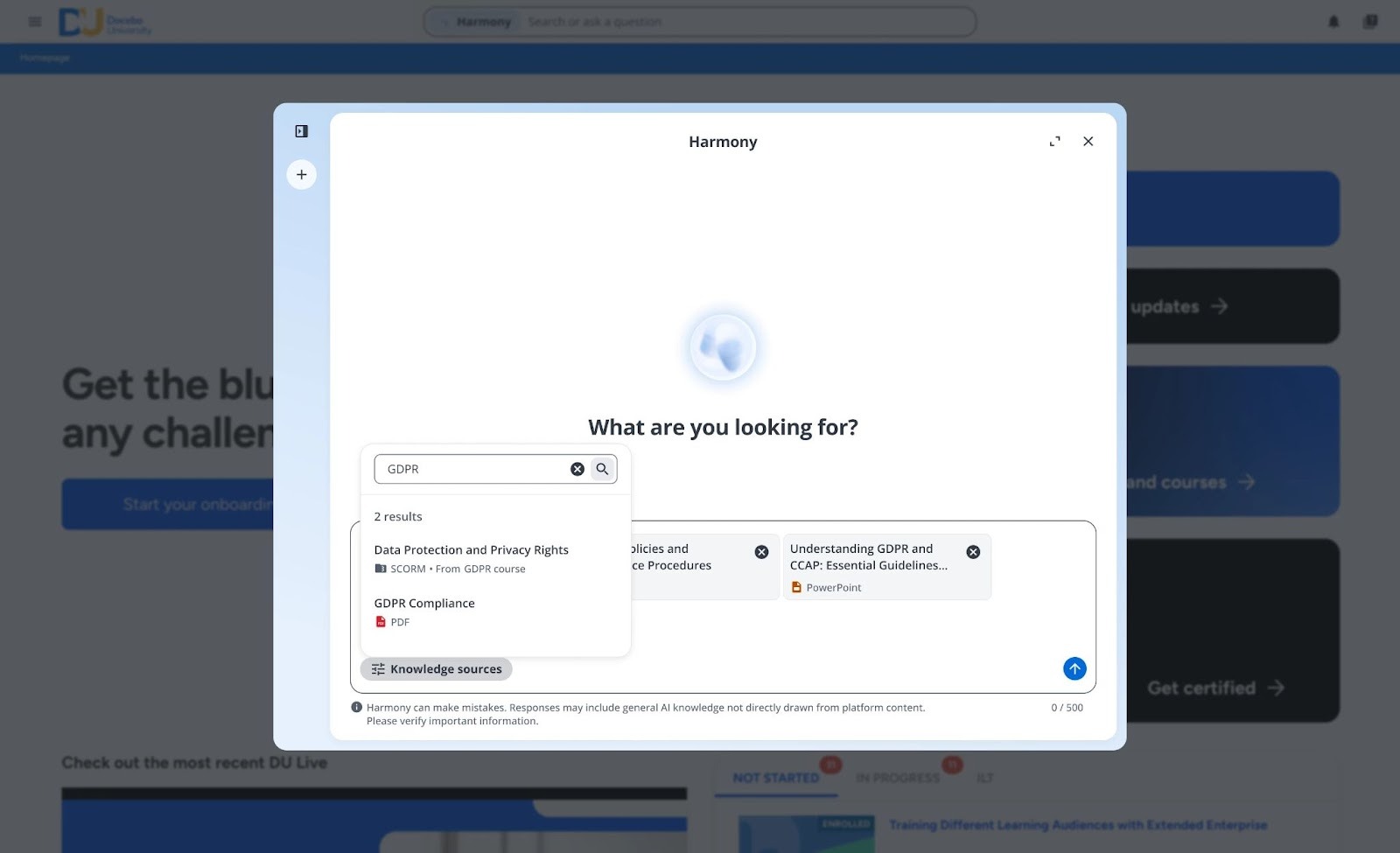

2. Harmony by Docebo

Harmony represents a fully embedded AI engine within the Docebo LMS. The system emphasizes automation and workflow integration.

Key characteristics:

Natural language search allows users to retrieve resources instantly, with reported time savings of 60-80%. The platform plans to add administrative automation that builds reports and enrolls users with a single prompt, estimating 80% time savings for admin work.

Future releases will include AI-generated outlines, podcasts, and videos to reduce content creation cycles. The roadmap also promises contextual guidance and pre-built AI agents for repetitive tasks.

Harmony integrates tightly into Docebo's platform. It understands page context and acts as a copilot for learners and administrators. The system includes enterprise-grade security with an AI control panel.

Limitations:

Harmony operates exclusively within the Docebo ecosystem. Learners must log into the LMS to access it. This doesn't fully eliminate context switching for users working primarily in other tools.

The platform's roadmap includes content generation features, which research suggests may not translate into measurable learning improvements.

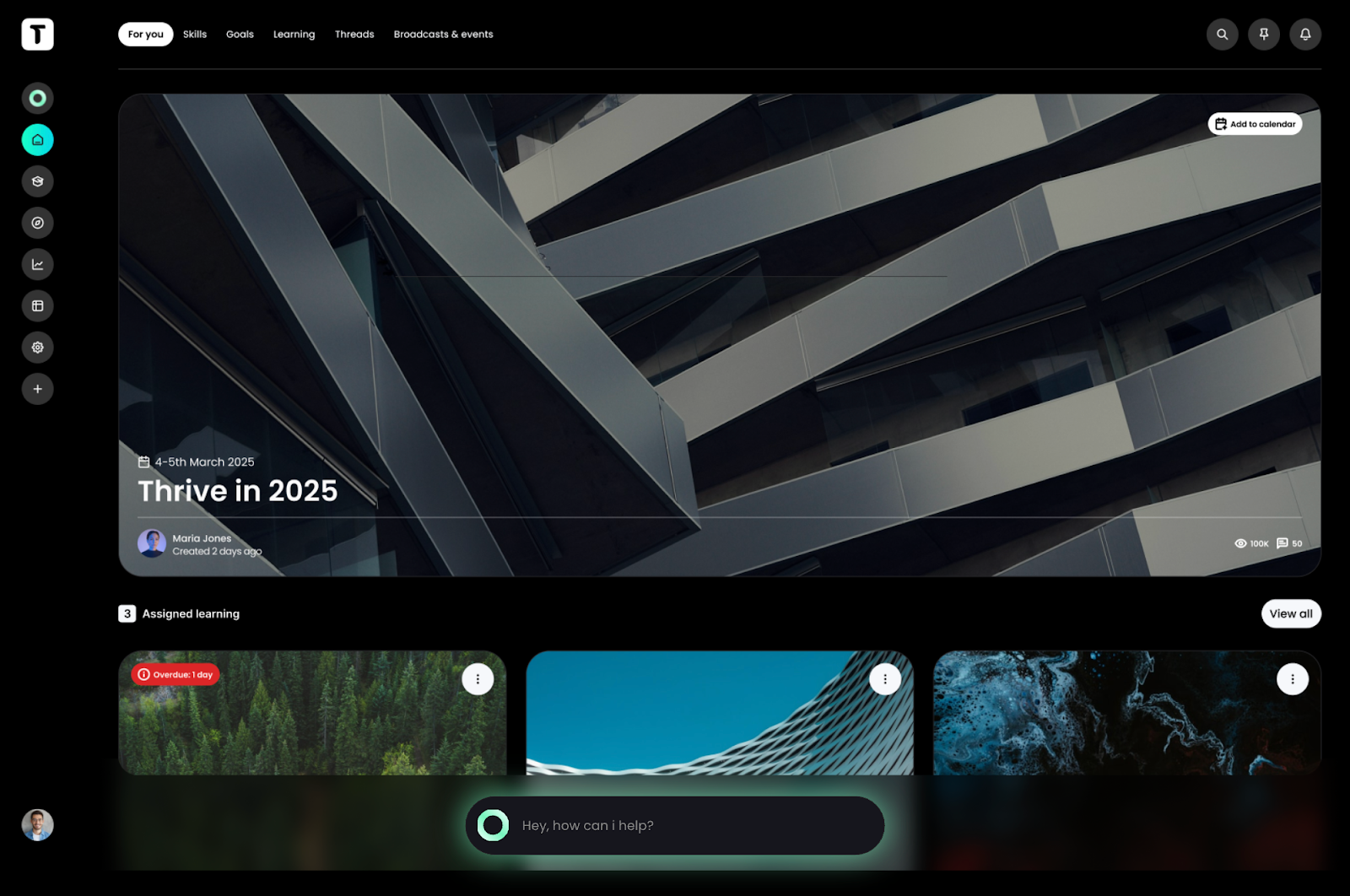

3. Kiki by Thrive Learning

Thrive unveiled Kiki in April 2025 as its intelligent AI engine. The platform emphasizes personalization and branding flexibility.

Key characteristics:

Kiki surfaces role-specific knowledge, summarizes and translates content (including SCORM files), and provides real-time answers. It automates administrative tasks like merge-tag updates, video time stamping, and autotagging.

Content generation features produce courses, quizzes, and summaries. The platform is integrating Google's Veo 2 for text-to-video generation. Organizations can fully brand Kiki and adapt its tone to match company culture.

Limitations:

Kiki currently operates within the Thrive platform. Many advanced features—workflow automation, multi-agent support—remain on the roadmap. It doesn't yet support Slack or Teams integration.

Like other competitors, Kiki emphasizes AI content generation. This aligns more with content production acceleration than knowledge access optimization.

4. AI Companion by 360Learning

360Learning's AI Companion integrates across its collaborative LMS/LXP platform. The system emphasizes conversational search and content authoring.

Key characteristics:

Search Mode allows users to ask questions and receive answers from course activities and auto-generated video transcripts. Answers link back to source courses, and the agent asks follow-up questions to create a conversational experience.

The system respects visibility permissions. It cannot access content a user isn't authorized to see. The roadmap includes additional modes for data insights, coaching, and platform usage assistance.

The platform includes AI-powered course authoring that converts documents into interactive courses and translates content into 67 languages. Smart Review provides instant feedback on learner answers.

Limitations:

AI Companion operates inside the 360Learning platform. It can't answer questions outside its content repository and doesn't integrate with external communication tools.

The platform's emphasis on generative authoring aligns with content-generation strategies rather than knowledge-access approaches.

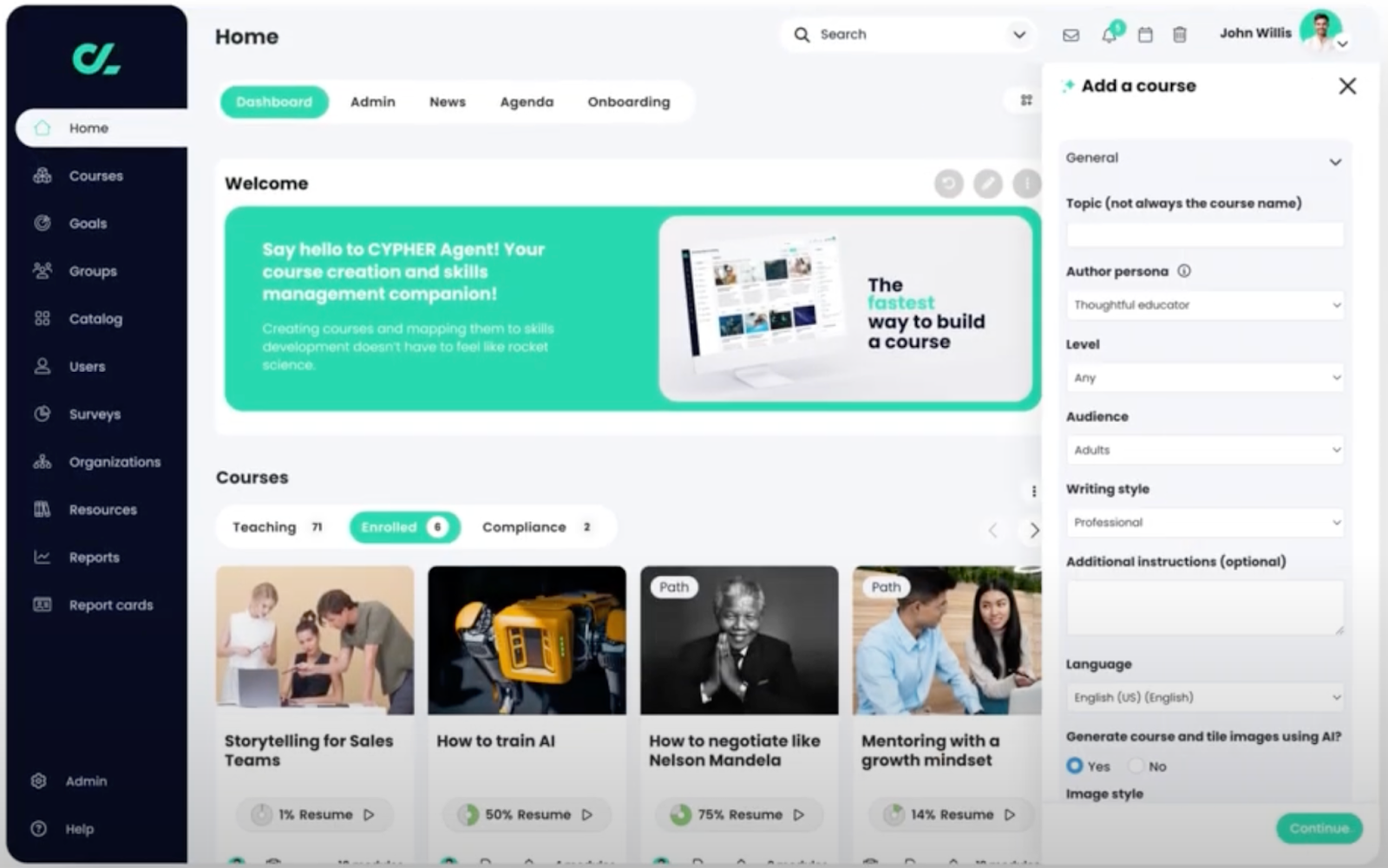

5. CYPHER Agent by CYPHER Learning

CYPHER Learning integrates a multi-faceted AI agent directly into its LMS. The platform emphasizes rapid content creation and multilingual support.

Key characteristics:

The agent generates courses and quizzes rapidly, tailoring materials to subject complexity and learner profiles. Content can be produced in over 50 languages.

The platform detects knowledge gaps and dynamically adjusts learning pathways. AI-driven quizzes and interactive activities provide real-time feedback and gamification elements.

CYPHER emphasizes integration with digital tools, continuous assessment, and automated administration.

Limitations:

The agent operates within the CYPHER LMS. It doesn't deliver answers within external collaboration tools. Context switching remains a challenge.

The platform's heavy focus on AI-generated content production aligns with content-acceleration strategies rather than knowledge-access optimization.

Comparative Analysis

The table below synthesizes key differences across platforms:

This analysis reveals a fundamental divide. One platform focuses exclusively on knowledge access through agentic learning. The others combine various features including content generation capabilities.

Research suggests these strategic differences matter. Platforms emphasizing content generation may accelerate production but show no evidence of improving learning outcomes. Those focusing on knowledge access demonstrate measurable improvements in retention, time-to-competency, and business impact.

Implementation Considerations

Organizations implementing AI learning agents should follow a structured approach. Research on successful deployments suggests a four-phase framework.

Phase 1: Foundation (Weeks 1-2)

Select a pilot team of 20-50 people. Audit existing content to assess what's available and identify gaps. Choose the deployment channel based on workforce type: Slack or Teams for desk workers, SMS for frontline employees.

This phase establishes scope and sets realistic expectations. Starting small allows for learning and adjustment before scaling.

Phase 2: Content Preparation (Weeks 3-4)

Structure content into digestible chunks. Add role-specific tags to enable personalization. Upload the content so the AI agent can retrieve it and ground its answers in organizational knowledge.

Quality matters more than quantity in this phase. Well-organized, properly tagged content produces better agent responses than large volumes of unstructured information.
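The chunk-and-tag step in this phase might look like the following. This is a minimal sketch under assumptions: it splits on blank lines, packs whole paragraphs into chunks under a word budget, and copies a list of role tags onto each chunk. The `chunk_document` name and its 50-word default are hypothetical.

```python
def chunk_document(doc_id: str, text: str, roles: list[str], max_words: int = 50) -> list[dict]:
    """Pack whole paragraphs into chunks of at most max_words words (a single
    longer paragraph becomes its own chunk) and tag each chunk with roles."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current, count = [], [], 0
    for para in paragraphs:
        words = len(para.split())
        if current and count + words > max_words:
            chunks.append(" ".join(current))  # close the chunk before it overflows
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append(" ".join(current))
    return [
        {"id": f"{doc_id}#{i}", "text": chunk, "roles": list(roles)}
        for i, chunk in enumerate(chunks)
    ]

policy = ("Refunds are issued within 14 days.\n\n"
          "Managers approve refunds over $500.\n\n"
          "Escalate disputes to finance.")
for chunk in chunk_document("refund-policy", policy, roles=["support", "finance"], max_words=12):
    print(chunk["id"], "|", chunk["text"])
```

Keeping paragraphs whole avoids splitting a policy statement mid-sentence. The `#index` suffix gives each chunk a stable ID that answers can cite.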

Phase 3: Pilot Launch (Weeks 5-6)

Begin with a small group of enthusiastic early adopters. Gather feedback daily to identify issues quickly. Roll out to the broader pilot team once initial problems are resolved.

This phase tests both technology and change management approaches. User feedback during the pilot shapes the eventual organization-wide deployment.

Phase 4: Optimization (Weeks 7-8)

Analyze usage data to understand how people interact with the agent. Refine content based on common questions and knowledge gaps. Prepare for scale with change management strategies and ongoing content update processes.

This phase establishes the operational model for maintaining and improving the system over time.

Starting narrow and expanding based on proven results increases the likelihood of success. Organizations should solve one specific problem effectively before attempting comprehensive transformation.

The Future of AI Learning Agents

Analysis of current trends suggests three development phases over the next five years.

Near-term (12-18 months)

Agents will become more proactive. Rather than waiting for questions, they'll suggest relevant resources based on upcoming projects or detected knowledge gaps.

Voice integration will expand, allowing hands-free interaction. Advanced personalization will incorporate learning styles, preferred formats, and optimal timing for individual users.

Medium-term (2-3 years)

Predictive support will anticipate knowledge needs before they arise. An agent might recognize a project launch approaching and proactively deliver relevant training.

Cross-platform intelligence will connect data across multiple systems. An agent could synthesize information from the LMS, project management tools, and communication platforms to provide comprehensive answers.

Emotional intelligence will improve. Agents will detect frustration, confusion, or confidence levels and adjust their responses accordingly.

Long-term (3-5 years)

Immersive learning through AR/VR will become conversational. Instead of pre-programmed scenarios, learners will have natural conversations within virtual environments.

Organizational intelligence will develop. Agents will understand company culture, unwritten rules, and contextual nuances that currently require human explanation.

Continuous coaching will become more sophisticated. Agents will track long-term skill development, identify patterns in learning behaviors, and provide increasingly personalized guidance.

What Won't Change

Despite technological advances, the fundamental role of human connection in learning will remain constant. Research suggests a 70/30 rule: 70% of learning will continue to come from human interactions—mentoring, coaching, peer discussion—while technology amplifies the remaining 30%.


Successful organizations will use AI to enhance human relationships, not replace them. The goal is making people more confident, capable, and engaged with their work, not eliminating human elements from learning.

Research Conclusions

This analysis reveals several key findings about AI learning agents in corporate L&D.

Strategic approach matters more than technology

Platforms using similar underlying AI technologies produce vastly different outcomes based on their strategic focus. Those emphasizing knowledge access through agentic learning demonstrate measurable improvements in learning outcomes. Those focusing on content generation show production speed gains but no evidence of improved retention or performance.

Flow-of-work integration drives adoption

Platforms that embed learning into existing collaboration tools (Slack, Teams, SMS) achieve higher engagement and completion rates than those requiring separate logins. The difference is substantial: 80-90% completion rates versus 15-20% for traditional approaches.

Evidence contradicts common assumptions

The research challenges the notion that faster content production improves learning. Generative SCORM and video tools create apparent efficiency that's eroded by validation and maintenance requirements. They don't embed effective learning science principles. Most critically, they show no correlation with improved learning outcomes.

ROI comes from outcomes, not production speed

Organizations achieving 300-500% ROI do so through measurable improvements in time-to-competency, knowledge retention, and productivity. Savings from faster content creation don't translate into these outcomes without the additional elements of good instructional design.

Context switching represents hidden costs

Research quantifies what many intuitively understand: forcing learners to leave their workflow consumes up to 40% of productive time. Platforms that eliminate this switching deliver productivity gains independent of learning outcomes.

The market is at a decision point

Organizations must choose between two fundamentally different approaches. One emphasizes producing more content faster. The other focuses on making existing knowledge more accessible and actionable. The evidence strongly favors the latter approach for improving actual learning outcomes.

Among the five platforms analyzed, Eddy by Continu most fully implements the agentic learning model. Its exclusive focus on knowledge access rather than content generation, its native integration with communication tools, and its Smart Segmentation™ technology align with the research evidence on what improves learning outcomes.

However, the key insight isn't about any single platform. It's about understanding which strategic approach to AI in learning delivers measurable results. Organizations should evaluate tools based on their impact on learning outcomes, not their production capabilities or feature lists.

“The future of corporate learning won't be determined by how much content can be generated, but by how intelligently knowledge can be structured, surfaced, and applied when employees need it most.”

- Scott Burgess, Founder & CEO of Continu
